Michael Posa

August 22, 2024

The speed, strength, and complexity of robotic platforms have advanced dramatically in recent years, with growing commercial applications in specialized areas like exploration, monitoring, and custom warehouse environments. However, the basic promise of multi-functional assistive robots, machines which perform meaningful tasks in the home or workplace, remains unfulfilled. I believe that, despite growing commercial investment, fundamental breakthroughs are still required to enable robots to enter unstructured environments and perform useful tasks with speed and surety remotely approaching that of humans. Limitations in our current ability to understand and control the interaction between robot and world, a basic phenomenon central to most robotic tasks, remain a critical roadblock toward more widespread deployment of robots.

While methods based in large data or expert imitation show substantial promise, deployment of multi-purpose robots will not be achieved by data alone, but instead by its careful integration with physically and mathematically consistent models. Thus my research group focuses on developing algorithms for learning and control to enable high performance in novel or data-scarce regimes, for example when a robot must generalize its training to new tasks or distributions. In particular, I aim to create autonomous robots which safely act with speed and dexterity, especially when they must reach out and proactively touch their environments. For example, when a robot enters a scene and encounters a set of previously unseen objects, it might first learn, over the course of seconds to a few minutes, about these objects, and then execute some real-time control strategy. To achieve this goal, and in contrast with presently prevailing approaches, my research offers unique insight into contact modeling that is simultaneously formally grounded, computationally effective, and empirically accurate.

These mathematical insights, arising from the structure of multi-contact dynamics, enable data-efficient learning and high-performance control for both legged locomotion and dexterous manipulation, where my group has pioneered new methods for theoretical and computational understanding. We have achieved the first demonstration of provably-stable reactive tactile feedback (Aydinoglu2020) (Aydinoglu2021b) and contact-rich model predictive control for manipulation (Aydinoglu2022) (Aydinoglu2024) (Huang2024) (Yang2024), as well as dynamic and robust model-based walking, running, and jumping on the Cassie underactuated biped (Yang2021) (Yang2023) (Acosta2023). Beyond control, my group has developed a theoretical and computational understanding of the multi-impact events ubiquitous in locomotion and manipulation (Halm2018) (Halm2019) (Halm2023). These formal foundations have implications for both algorithm design and empirical practice. For example, establishing the ineffectiveness of unstructured learning for contact (Parmar2021) (Bianchini2022) has led to a breakthrough class of implicit learning strategies with orders of magnitude better data-efficiency when identifying models of contact-rich robotics (Pfrommer2020) (Jin2022) (Bianchini2023), and to principled reduced-order approximations for legged locomotion and control (Chen2020) (Chen2023b) (Chen2024) (Jin2024) (Bui2024) that bridge the gap between complex, but accurate, models and those suitable for real-time planning and control.

My group’s emphasis is on addressing the core, underlying challenges in robotics that prevent the robots of today from being deployed into the homes and workplaces of the broader community. We consistently seek out research topics bearing on important but relatively neglected settings where we have unique insights to offer, addressing questions which would remain unanswered if not for our efforts.

Algorithmic advances in control with contact

Robotic manipulators, while fairly capable at grasping objects, remain limited when performing general acts of dexterous or in-hand manipulation. To achieve human-like performance, robots must become more adept at quickly reacting as objects shift and slide (perhaps intentionally) in their grasp. While learned policies function well within their training distribution, they struggle to generalize; however, model-based control has traditionally been unable to handle the hybrid aspects of multi-contact manipulation, where an algorithm must also decide when to initiate or break contact, and when to stick or when to slide. These discrete choices are critical, as modeling error or disturbances can perturb any pre-planned or nominal contact sequence, requiring real-time decision making to adjust or adapt. My group has pioneered the first model-based control algorithms capable of dynamic, multi-contact dexterous manipulation, which I see as a key building block for generalizable, multi-purpose skills in either model-based or learned settings.

Provably stable reactive tactile feedback via semi-definite programming

With accurate and spatially dense tactile sensors becoming widely available, it is imperative that the field learn to deploy these rich information sources to enable dexterous manipulation. Biological studies, for example, clearly demonstrate the critical role played by tactile sensing for human manipulation. Despite this, current feedback and planning approaches often compress these measurements to binary signals, used only to identify contact and switch between control modes. Such approaches are brittle and difficult to implement, requiring rapid detection and switching. In contrast, my group has leveraged tactile sensing within reactive-style control policies; by directly operating on force measurements, we synthesized tactile-feedback controllers which are provably stabilizing for contact-rich problems (Aydinoglu2020) (Aydinoglu2021b). This work was an honorable mention for the annual IEEE Transactions on Robotics King-Sun Fu Memorial Best Paper Award in 2022 and a finalist for the Annual Best Paper Award given by the IEEE-RAS Technical Committee on Model-Based Optimization for Robotics in 2021.

Real-time multi-contact MPC

The controllers above, while provably stable, require seconds to synthesize before they can be deployed for feedback. To adapt to new or constantly changing tasks, my group has made breakthrough advancements in real-time feedback. While linearization has led to effective policies for smooth systems, like the ubiquitous Linear Quadratic Regulator (LQR), no such analogue exists for multi-contact hybrid dynamics.
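For context on the smooth case mentioned above, the following is a minimal sketch of discrete-time LQR on a linear model, with the gain obtained by iterating the Riccati recursion to a fixed point. The double-integrator system, the weights `Q` and `R`, and the function name `dlqr_gain` are illustrative assumptions and are not taken from the cited work.

```python
import numpy as np

def dlqr_gain(A, B, Q, R, iters=2000):
    """Iterate the discrete Riccati recursion; return K for the law u = -K x."""
    P = Q.copy()
    for _ in range(iters):
        BtP = B.T @ P
        # K = (R + B' P B)^{-1} B' P A
        K = np.linalg.solve(R + BtP @ B, BtP @ A)
        # P <- Q + A' P (A - B K), the standard Riccati update
        P = Q + A.T @ P @ (A - B @ K)
    return K

# Assumed example: a double integrator discretized with step dt.
dt = 0.1
A = np.array([[1.0, dt], [0.0, 1.0]])
B = np.array([[0.5 * dt**2], [dt]])
Q = np.eye(2)
R = np.array([[0.1]])

K = dlqr_gain(A, B, Q, R)
# Closed-loop stability check: all eigenvalues of A - B K inside the unit circle.
spectral_radius = max(abs(np.linalg.eigvals(A - B @ K)))
```

A single linearization suffices here because the dynamics are smooth; with contact, the linear model changes with every contact mode, which is why this construction does not carry over directly.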

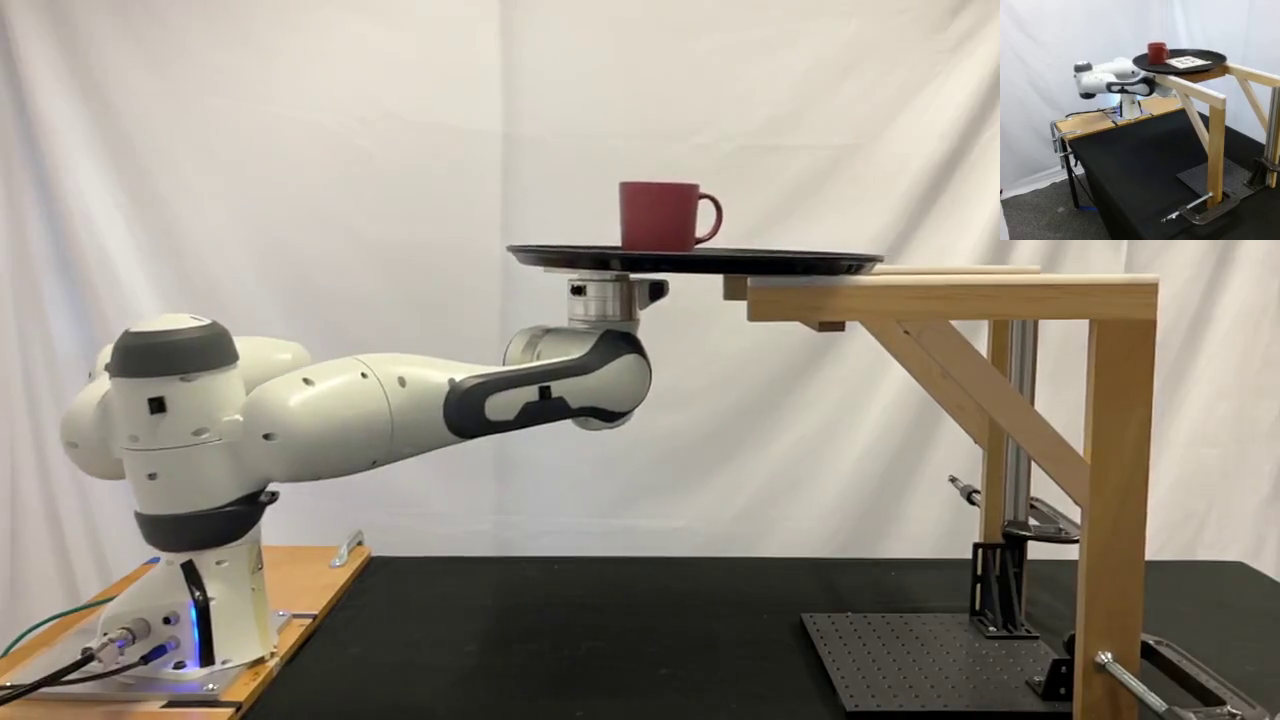

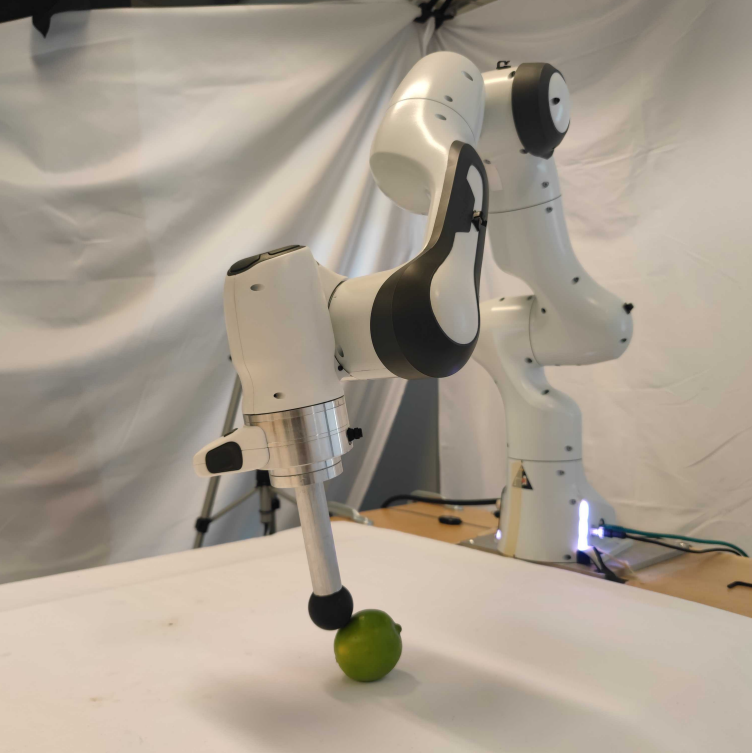

We have developed a new algorithm for real-time hybrid MPC, tailored to multi-contact systems (Aydinoglu2022) (Aydinoglu2024). This approach exploits the distributed nature of the alternating direction method of multipliers (ADMM) and effectively parallelizes the most difficult aspect of the control problem, reasoning over contact events. By leveraging non-smooth structures, we open new avenues for the use of differentiable algorithms to optimize over non-smooth dynamics without the inaccuracies induced by smoothing or approximations common in other methods. Experimental validation of contact-implicit MPC algorithms is extremely rare, since success in simulation may not transfer easily to real systems; our work has demonstrated unique capabilities in real-time dynamic manipulation, including the ability to actively modulate stick-slip for on-palm dexterity (Yang2024). The algorithm, and its variations, have been evaluated on two different hardware setups across a range of tasks, including use of a Franka Emika Panda arm interacting with objects while frequently making and breaking contact. The initial publication in this line of work (Aydinoglu2022) was a finalist for the Outstanding Dynamics and Control Paper Award at ICRA 2022, and our most recent results received the Outstanding Student Paper Award at RSS 2024 (Yang2024). A key limitation of our approach, and of any model-based method, is the need for a model. Accordingly, we have also combined our MPC with multi-contact model learning, achieving the first online-adaptive control strategy that can rapidly adjust to inaccuracies in the given model of the contact dynamics (Huang2024).
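The splitting idea behind ADMM can be illustrated on a much simpler problem than contact-implicit MPC. The sketch below applies textbook ADMM to a small quadratic program with nonnegativity constraints; the problem data and the function name `admm_nonneg_qp` are assumptions chosen for illustration, and this is not the algorithm of (Aydinoglu2022) or (Aydinoglu2024). What it shows is that the projection step acts on each coordinate independently, the kind of separability that makes such steps straightforward to parallelize.

```python
import numpy as np

def admm_nonneg_qp(P, q, rho=1.0, iters=500):
    """Textbook ADMM for: minimize 0.5 x'Px + q'x  subject to x >= 0."""
    n = len(q)
    x = np.zeros(n)
    z = np.zeros(n)   # copy of x that carries the constraint
    u = np.zeros(n)   # scaled dual variable
    lhs = P + rho * np.eye(n)
    for _ in range(iters):
        # x-update: unconstrained quadratic solve (a linear system).
        x = np.linalg.solve(lhs, rho * (z - u) - q)
        # z-update: projection onto x >= 0, independent per coordinate.
        z = np.maximum(0.0, x + u)
        # dual update: accumulate the disagreement between x and z.
        u = u + x - z
    return z

# Assumed toy data; the constrained optimum is [1/3, 0].
P = np.array([[3.0, 1.0], [1.0, 2.0]])
q = np.array([-1.0, 4.0])
x_opt = admm_nonneg_qp(P, q)
```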

Impact-invariant running and jumping

During many dynamic motions, like when a legged robot runs or jumps, impact with the environment results in large contact forces and rapid changes in velocity. Over brief periods, measuring and applying feedback to these velocities is challenging, further complicated by uncertainty in the impact model and impact timing. However, effective feedback is also critical: poor control choices during these windows commonly lead to instability. Prior attempts to either avoid impacts, or to treat them with standard control techniques, are limited in their dynamic capability. My group developed an impact-invariant control law, provably robust to uncertainties during impact events while maintaining as much control authority as possible (Yang2021) (Yang2023). This work enabled the Cassie bipedal robot to jump onto platforms 40 cm in height and to run stably, the first example of model-based running on the widely used Cassie bipedal platform.
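One way to make a velocity signal insensitive to an unknown impact impulse is to project it onto the directions that no impulse can change. The sketch below illustrates that general idea under the assumption of a rigid-body impact model in which the velocity jump is `M^{-1} J^T Λ`; the matrices, the numbers, and the function name `impact_invariant_projector` are hypothetical, and this is an illustration of the concept rather than the controller of (Yang2021) or (Yang2023).

```python
import numpy as np

def impact_invariant_projector(M, J):
    """Projector that removes every velocity direction an impact impulse can excite.

    Assumes the jump in generalized velocity is M^{-1} J^T Lambda for an
    unknown impulse Lambda (mass matrix M, contact Jacobian J).
    """
    B = np.linalg.solve(M, J.T)          # columns span all possible velocity jumps
    # B @ pinv(B) is the orthogonal projector onto range(B); subtract it from I.
    return np.eye(M.shape[0]) - B @ np.linalg.pinv(B)

# Hypothetical 3-DOF system with a single contact constraint.
M = np.diag([2.0, 1.0, 0.5])
J = np.array([[1.0, 0.0, 1.0]])
P = impact_invariant_projector(M, J)

v_pre = np.array([0.3, -0.2, 0.1])       # velocity just before impact
impulse = np.array([1.7])                # arbitrary, unknown to the controller
v_post = v_pre + np.linalg.solve(M, J.T @ impulse)

# The projected velocity is identical before and after the impact.
projected_pre = P @ v_pre
projected_post = P @ v_post
```

Feedback applied to the projected signal does not react to the jump, while the remaining directions stay available for control.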

Formally grounded, data-efficient contact modeling

To enable dynamic and robust contact-driven robotics, it is necessary to develop methods capable of rapidly adapting to novel tasks and environments, either through accurate, physics-based models or data-driven methods which require minimal exploration time. A key challenge lies in the fundamental conflict between standard assumptions in algorithmic control and learning and the frictional forces and impact events at the heart of contact-driven robotics. Specifically, when a rigid (or near-rigid) robot collides with an object, impact forces resolve over a few milliseconds, leading to hybrid jumps, and Coulombic friction describes a non-smooth relationship between velocity and tangential forces.
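The non-smoothness of Coulomb friction is easy to see in one dimension. The sketch below, a toy example with assumed parameters and a hypothetical function name `step_block`, advances a block on a flat surface by one time step: the friction impulse is whatever would bring the block to rest, clamped to the friction limit. Below the limit the block sticks exactly; above it the block slides, so the force-velocity relationship has a kink at zero velocity.

```python
import numpy as np

def step_block(v, f_applied, m=1.0, mu=0.5, g=9.81, h=0.01):
    """One implicit time step of a block on flat ground with Coulomb friction."""
    normal_impulse = m * g * h
    # Velocity the block would reach without friction.
    v_free = v + h * f_applied / m
    # Friction tries to cancel all motion, but is limited to mu * normal impulse.
    friction_impulse = np.clip(-m * v_free,
                               -mu * normal_impulse,
                               mu * normal_impulse)
    return v_free + friction_impulse / m

# Applied force below mu*m*g = 4.905 N: the block stays at rest.
v_stick = step_block(0.0, f_applied=3.0)
# Applied force above the limit: the block accelerates at (f - mu*m*g)/m.
v_slide = step_block(0.0, f_applied=8.0)
```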

Theoretical and algorithmic physics-based modeling

Modeling multi-contact dynamics poses basic theoretical challenges: models are often ill-posed, leading to paradoxical settings without solutions, or those where multiple solutions are equally plausible. Our group has developed new mathematical theory for describing the challenges in quasistatic modeling of manipulation (Halm2018), and has created the first theoretical and algorithmic approaches to describing and computing the possible outcomes resulting from multi-impact events, ubiquitous in locomotion and manipulation (Halm2019) (Halm2023).
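Ill-posedness of this kind can be reproduced in a few lines, assuming the common linear complementarity problem (LCP) formulation of rigid contact, which the text above does not name. The sketch enumerates all solutions of `0 <= z`, `M z + q >= 0`, `z * (M z + q) = 0` by trying every active set; the scalar problems are toy instances, not derived from a particular mechanism, and `lcp_solutions` is a hypothetical helper.

```python
import itertools
import numpy as np

def lcp_solutions(M, q, tol=1e-9):
    """Enumerate solutions z >= 0 with w = M z + q >= 0 and z_i * w_i = 0."""
    n = len(q)
    solutions = []
    for active in itertools.product([False, True], repeat=n):
        idx = [i for i in range(n) if active[i]]
        z = np.zeros(n)
        if idx:
            sub = M[np.ix_(idx, idx)]
            if abs(np.linalg.det(sub)) < tol:
                continue  # degenerate active sets are skipped in this sketch
            # On the active set, force w_i = 0 and solve for z_i.
            z[idx] = np.linalg.solve(sub, -q[idx])
        w = M @ z + q
        if np.all(z >= -tol) and np.all(w >= -tol):
            solutions.append(z)
    return solutions

M_bad = np.array([[-1.0]])
two = lcp_solutions(M_bad, np.array([1.0]))     # z = 0 and z = 1 both valid
none = lcp_solutions(M_bad, np.array([-1.0]))   # no valid z exists
one = lcp_solutions(np.array([[1.0]]), np.array([-1.0]))  # unique: z = 1
```

With a positive definite `M` the solution is unique; the non-existence and non-uniqueness cases appear once that property is lost, as can happen with friction.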

Data-driven modeling

Physics-based approaches to modeling require system identification or an a priori understanding of the robot and world. However, it can be difficult to capture the geometric and surface properties required for such models. As a result, machine learning approaches to manipulation, including modeling and reinforcement learning, have rapidly grown in popularity; despite this, common approaches do not mesh well with the non-smooth physics, limiting their accuracy and data efficiency. My group has both pushed for a deeper, grounded understanding of these challenges and developed novel, physics-based methods for achieving orders of magnitude better data efficiency. The structured use of non-smooth physics in machine learning has, outside of our work, largely been ignored by standard methods in both robotics and existing physics-inspired learning techniques.

Understanding structural challenges. Standard methods for modeling with deep neural networks were originally developed for smooth processes; their use in contact-driven robotics exposes a fundamental mismatch between the biases of learning algorithms and the underlying stiff dynamics that arise during contact. My group has led investigations into these challenges, providing substantial empirical (Parmar2021) (Acosta2022) and theoretical (Bianchini2022) evidence that applying black-box learning to contact-rich robotics is doomed to poor data efficiency.
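A small numerical experiment conveys the flavor of this mismatch. In the sketch below, a polynomial stands in for a generic smooth model (an assumption made for simplicity; it is not a neural network and not the analysis in the cited papers) and is fit by least squares to a unit Coulomb friction curve. Raising the model capacity does not remove the large error next to the discontinuity at zero velocity.

```python
import numpy as np

# Sliding velocity samples and the corresponding unit Coulomb friction force.
v = np.linspace(-1.0, 1.0, 201)
force = -np.sign(v)

def max_fit_error(degree):
    """Least-squares Chebyshev fit of the given degree; return worst-case error."""
    coeffs = np.polynomial.chebyshev.chebfit(v, force, degree)
    pred = np.polynomial.chebyshev.chebval(v, coeffs)
    return np.max(np.abs(pred - force))

# Worst-case error for increasingly flexible smooth models.
errors = {degree: max_fit_error(degree) for degree in (3, 9, 15)}
```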

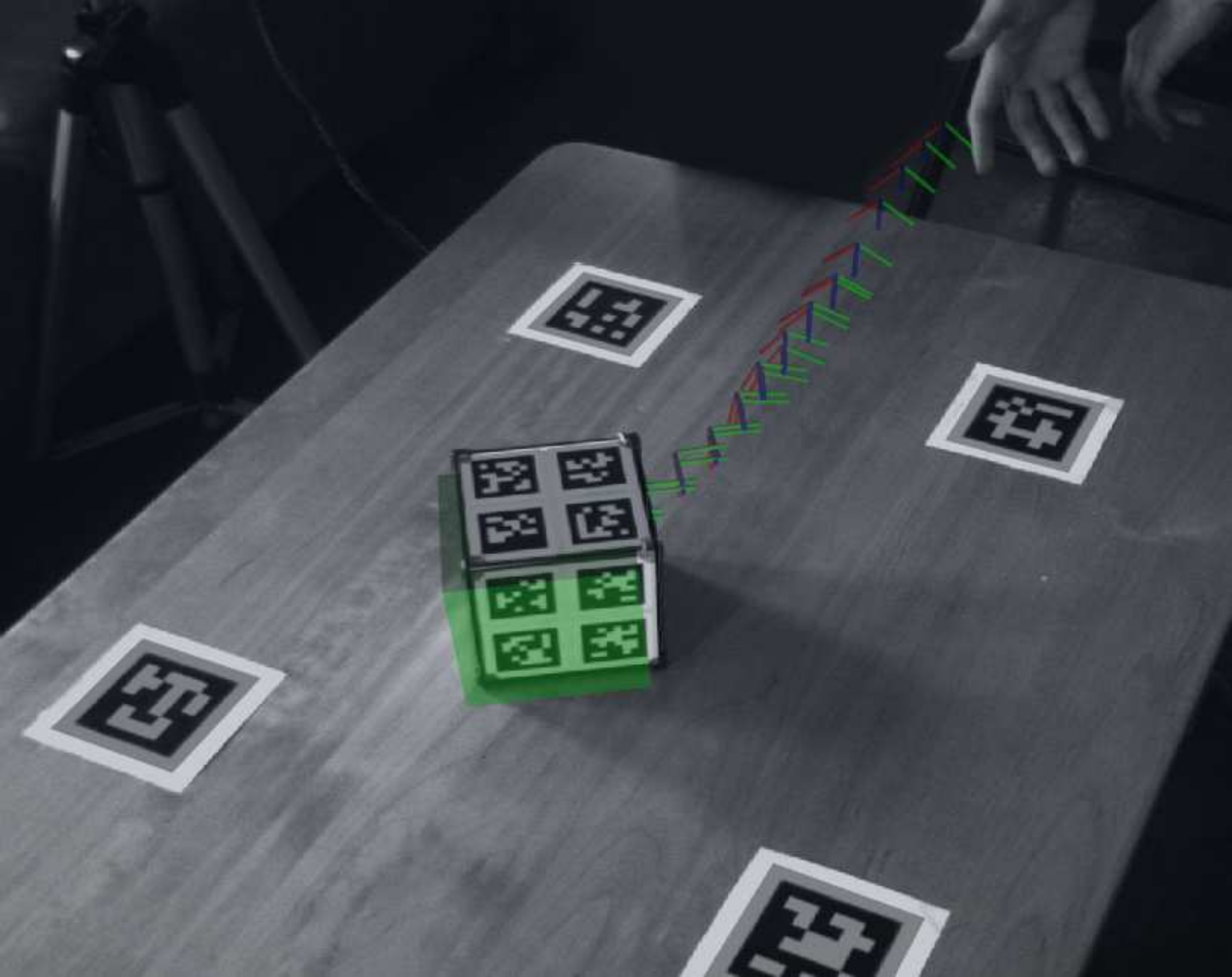

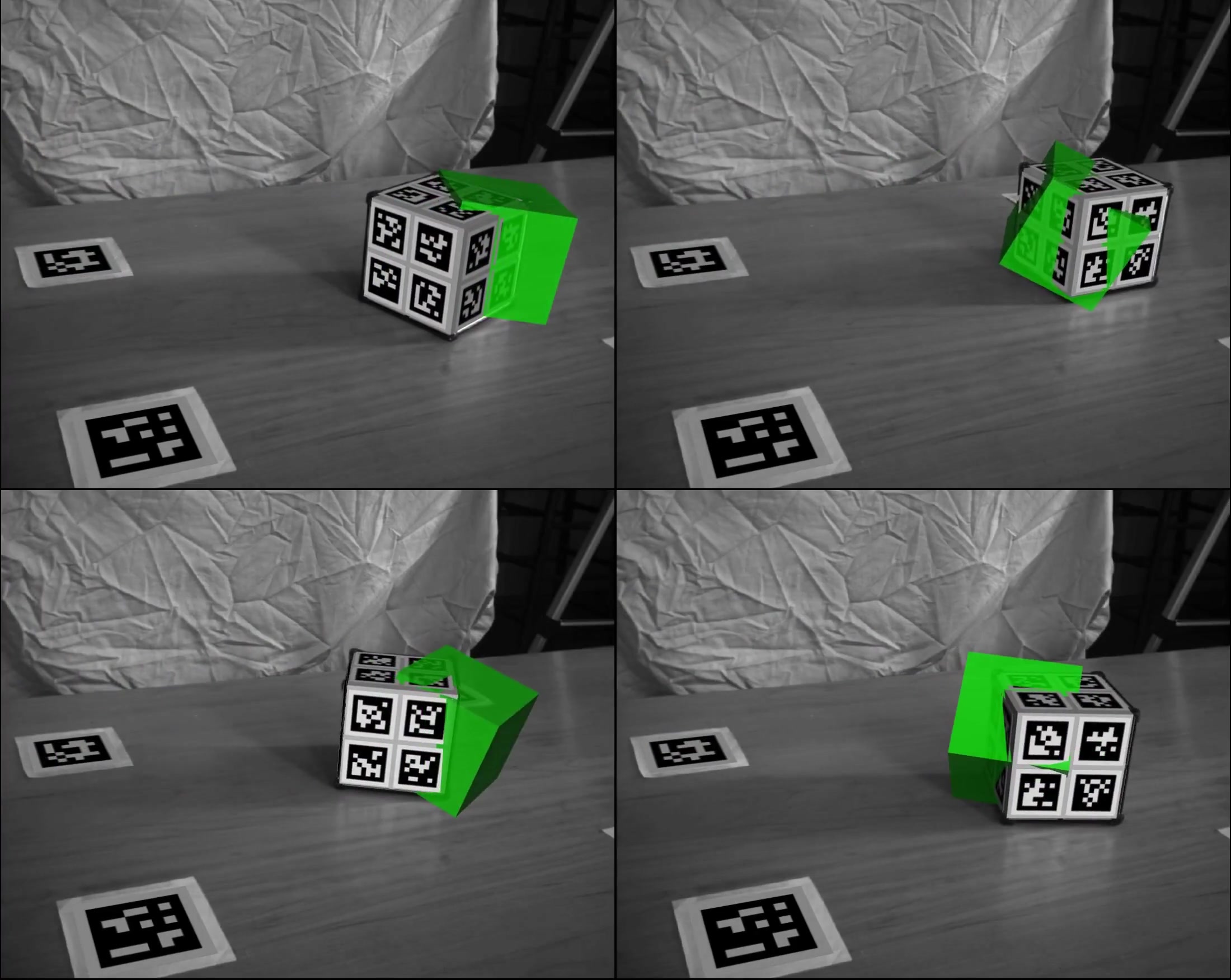

Physics-based implicit learning. My group has made significant progress towards resolving the primary challenges in learning robot-object dynamics, developing learning frameworks explicitly designed to mirror dynamic discontinuities due to friction and impacts (Pfrommer2020) (Jin2022) (Bianchini2023). We have developed algorithms which maintain the flexibility and representational power of deep neural networks, but smoothly learn to represent the non-smooth dynamics inherent in robotics. Our approach requires only seconds of real-world data to identify the geometric (as a deep network), frictional, and inertial properties of objects tossed gently against the ground, capturing the multimodal contact dynamics and dramatically outperforming naive deep learning and methods based on smooth contact modeling (e.g. differentiable simulation).

Optimal reduced-order models

Simple, or reduced-order, models are widely used as templates for complex robotic systems. By greatly reducing the dimension of the state and action spaces, use of simple models enables robust, real-time control and a broad range of analytic tools. For legged robots, these models have historically taken the form of center-of-mass representations. My group has pioneered algorithmic approaches to find the best, task-relevant simple models (Chen2020) (Chen2023b) (Chen2024). We formulate an optimization problem to find the best simple model capable of a set of tasks, solved via bilevel optimization, combining stochastic gradient descent with trajectory optimization or reinforcement learning. The goal is to synthesize a model that achieves low cost across a distribution of tasks when deployed in a real-time planning framework. This research promises to fill in the gaps left by hand-designed center-of-mass models, improving the energy efficiency and dynamic capabilities of bipedal robots by blending simplicity, necessary for real-time planning, with high performance.

Hybrid reduced-order models for dexterous manipulation

In dexterous manipulation, the vast number of potential hybrid modes, more than the state-space dimension, is a key driver of computational complexity. Based on our observation that far fewer of these modes, corresponding to contact configurations, are actually necessary to accomplish many tasks, my group has made significant progress at the intersection of non-smooth model learning, real-time control, and model-order reduction to automatically identify reduced-order hybrid models (Jin2024) (Bui2024). The resulting approach finds approximations which reduce the mode count by multiple orders of magnitude, while achieving near-optimal performance when combined with model-based control. Applied to dexterous manipulation, combining model-learning with both model-based and model-free reinforcement learning, the method achieved state-of-the-art closed-loop performance in less than five minutes of online learning.

Looking Forward

As I look toward the next five to ten years in the field, it is impossible to ignore the large wave of interest and excitement coming from the use of large data and associated foundation models. In robotics, this wave has been accompanied by industry investments at a scale never before seen in general-purpose robotics. Industry, including both well-established companies like Amazon, Meta, and Google and newer startups, is well-positioned to focus on the issue of scale, with resources (both human and computational) that far outstrip those in academia. Given this, I believe that it is imperative that academic researchers focus on research topics which complement the emerging capabilities from big data, but address current and future shortcomings.

This current wave is already driving substantial advances in robotic hardware, increasing capabilities and decreasing costs, but I am skeptical that the promised level of autonomy will be realized in the next few years. While current data-driven approaches are capable of generating impressive laboratory demonstrations, fundamental questions remain before we can deploy robots into realistic environments that inevitably push them outside their training distributions while requiring high degrees of safety and reliability. In my lab, we will continue our focus on low-data learning in combination with control, to enable robotic capabilities in novel or out-of-distribution settings. I also intend to renew our research on more formal methods and analysis, combining theory and numerical algorithms to derive performance or safety guarantees for robots performing dynamic tasks in close proximity to humans. Beyond these existing lines of research, I believe that autonomous robots capable of executing complex, multi-step tasks will require hierarchical strategies, but that existing control architectures are often brittle and sensitive to uncertainty and cascaded suboptimality. I intend to lead efforts, both in my group and in the broader GRASP and robotics communities, to better understand why these traditional architectures, so successful in other fields of control, have struggled in robotics. Armed with this understanding, we will aim to design the next generation of hierarchical strategies that provide both the expertise and capacity to generalize required for robots to succeed.